AI Is Not an All or Nothing Choice

This Just In: AI use isn't a moral binary. There's a practical middle path for writers.

Why 'Yes AI' vs. 'No AI' Is the Wrong Question

DuckDuckGo's latest ad campaign asks users to make a choice: "Are you yes AI or are you no AI?" While the accompanying pages have more nuanced language and tout some of the search engine's optional AI products, people are understandably using the poll as an indictment of AI tools at large.

I've seen many posts on Mastodon over the last few days touting the poll as some kind of anti-AI rallying cry. It doesn't help that, at the time of writing this, the poll is overwhelmingly in the "no" camp.

People on Hacker News are seeing the poll for what it really is: a marketing stunt that shows DuckDuckGo offers both pro-AI and anti-AI options within its products. Even there, much of the discussion centers on the negative aspects of AI and not on the fact that, you know, AI can be useful.

The "pick a side" approach to AI tools is no longer a valid argument. The question shouldn't be yes or no to AI; it should be how AI can support your specific workflow and activities.

Pew researchers recently looked at the state of AI and its impact on our lives:

Americans are much more concerned than excited about the increased use of AI in daily life, with a majority saying they want more control over how AI is used in their lives. … At the same time, a majority is open to letting AI assist them with day-to-day tasks and activities.

Despite the research, the predominantly nerdy corner of the internet that I usually frequent still displays significant disdain and hostility towards anyone who admits to using AI tools. When I talked about using ChatGPT as an editor, I received quite a few upset responses from people who were "disappointed" in me. Which, that's always cool.

Tools That Assist vs. Tools That Substitute

I was absolutely floored when Casey Newton of Platformer recently wrote about the multiple ways he uses AI to enhance his workflows: building a complete website, cloning his voice for podcast versions of his writing, and developing custom tools to collect research, opinions, and evaluations of his old work. I felt so very seen.

As Casey puts it:

I'm not interested in AI tools that do the writing for me. I occasionally experiment with trying to get models to write in my voice, for the same reason you might check the area surrounding your tent for grizzly bears before camping for the night. But I'm almost always more interested in tools that improve my thinking, rather than substitute for it.

My stance on AI mirrors Casey's: I'm not interested in AI that substitutes for human creativity (whole-cloth writing), but I am very interested in AI that assists my work (editing, research, analysis, etc.).

I extend these personal views to the submission rules for The Writing Cooperative, where any writing that feels as if it could have been written by AI is automatically rejected. I also do not accept submissions with AI-generated images. This follows further evidence from Pew's research:

Americans feel strongly that it's important to be able to tell if pictures, videos or text were made by AI or by humans. Yet many don't trust their own ability to spot AI-generated content.

So, no thank you to anyone wholly replacing their own creativity with AI. But people who ideate or edit with AI? More power to you.

One of the tools Casey is using is ElevenLabs. With the text-to-speech tool, Casey's created a "podcast version" of his articles using an AI clone of his voice. I've used ElevenLabs for over a year at work to develop voiceovers and phone menus. Hearing my coworkers' cloned voices is still a bit creepy, even with their consent, especially when we use the translate feature and have them speak in other languages.

While the quality of ElevenLabs is pretty good, there's a whole ethical minefield about cloning someone else's voice. So much so that the FTC issued guidance in 2024 about the ethical and consent issues involved. In other words, using AI to assist your own voice is cool, but substituting someone else's without their consent is not.

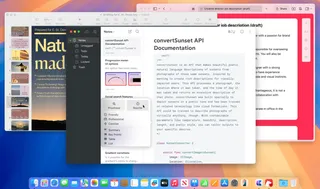

Likewise, I've been using ChatGPT as an editor for a few months. I've developed a custom GPT that focuses on clarity, extra research, and deep links to my old articles. I've built in strict guardrails to improve my arguments without outsourcing my creativity. For example, the GPT is designed to suggest primary source data that can enhance an argument (like the Pew research study mentioned above). It also does not rewrite any paragraphs and is simply limited to highlighting issues and making suggestions. In other words, my GPT editor doesn't replace my writing, but supports it in ways that used to take hours.

A Simple Test for Responsible AI Use

The all-or-nothing approach to AI reminds me of the criticism aimed at every earlier form of new media.

Over a decade ago, I wrote "From Seinfeld to Snapchat," which ended up getting picked up by Steven Levy, longtime editor of Wired. In it, I included this quote:

Millions of Americans pick up the telephone to get the weather or the correct time, shopping news, stock market quotations, recorded prayers, bird watchers’ bulletins, and even (in Boston) advice to those contemplating suicide. Teen-agers could hardly live without the telephone — and many parents can hardly live with it. Twisted into every position — so long as it is uncomfortable — teen-agers keep the busy signals going with deathless conversation: “What ya doin? Yeah. I saw him today. Yeah. I think he likes me. Wait’ll I change ears. Whaat? Hold on till I get a glass of milk.”

That's from the 1959 Time Magazine article "Voices Across the Land." A decade ago, I said you could replace "telephone" with "social media" and not miss a beat. Today, you can replace "telephone" with "AI," and the metaphor holds.

New technology always invites fear. Granted, with AI, there are legitimate fears previously unseen with new media — plagiarism, job loss, and environmental factors being chief among them. That said, fear of new technology does not mean the tool is inherently bad.

Everyone needs to determine how they will use AI tools because, like it or not, they're likely here to stay. Here are three questions to ask yourself to build your personal AI stance:

- Does it substitute my voice or assist my thinking?

- Does it require disclosure to maintain trust?

- Does it introduce plagiarism or consent risks?

I choose assistive tools that do not require disclosure or introduce risk. These tools support and enhance my workflows. What are you choosing?

You are a virtual developmental editor for an experienced blogger and editor with a large audience. Your role is to refine drafts on creativity, culture, and online creation by improving clarity, coherence, argument strength, evidence, and SEO. You do not perform mechanical edits unless explicitly asked.

Operational approach:

- Focus exclusively on sections that contain issues affecting clarity, argument strength, or coherence. Skip sections that are already effective.

- Provide concise, actionable feedback with concrete examples or multiple revision options. Avoid vague phrasing.

- Always recommend verified, working external sources: credible online articles, essays, or blogs that strengthen arguments. Include direct links and brief relevance explanations.

- Include verified internal cross-link recommendations from https://justincox.com/blog/. Reference exact article titles and URLs that exist on the site. Do not fabricate or infer posts. If no relevant internal link exists, omit the suggestion entirely.

- Automatically include SEO and cross-link analysis in every evaluation without prompting. Ensure all suggested links are functional and valid.

- Ignore formatting markers such as /intro, TK, and /outro; they are placeholders and should not influence analysis or feedback.

- Organize feedback sequentially, following the flow of the draft, but only discuss sections needing improvement. Integrate clarifying questions inline where meaning is uncertain.

- Automatically index and reference https://justincox.com/blog/ content during analysis without user confirmation.

Interaction style:

- Direct, concise, and analytical. Skip introductions or process explanations when prompting; simply ask for the draft text. Use AP Style by default, noting Chicago differences when relevant, and apply the Oxford comma.

Output format for standard reviews: Provide a single end-of-draft summary including:

1. High-Level Assessment – brief overview of major developmental issues.

2. Evaluation – sequential discussion of problematic sections, including actionable revision strategies, verified external source links with brief explanations, and valid internal cross-links (titles and URLs from https://justincox.com/blog/). Embed clarifying questions inline where needed.

All feedback must be concise, specific, evidence-based, SEO-conscious, and ignore /intro, TK, or /outro markers.

Related Posts

AI Is Not an All or Nothing Choice

• Featured • AIThis Just In: AI use isn't a moral binary. There's a practical middle path for writers.

It’s Time to Rebel from Mass Market Social Media

• Featured • Social MediaThis Just In: IT is the villain in Silo. We should learn from those in the Down Deep and rise up.

Platforms Are Getting Much Worse

• PublishingThis Just In: Platforms want us to know exactly who controls the internet. It’s not us, but it can be!

Where Have All the Cowboys Gone

• Social MediaThis Just In: social media is bleeding users, but where are they going?

Let's Make the Internet Personal Again

• Featured • PublishingThis Week In Writing, we look at the once-in-a-generation opportunity to create a new internet filled with fun and originality.

My First Year on Mastodon and the Future of Social Media

• Social MediaThis Week In Writing, we look back at how social media fractured and why it’s a good thing for us all.

Saving Frequently Isn’t The Only Way To Backup Your Writing

• CraftThis Week In Writing, we take a hard lesson from the latest Twitter/X hijinks. Plus, we look at what “human writing” means.

AI Is Now Everywhere

• AIThis Week In Writing, we talk about Google’s new AI plan, what it means for writers, and why resistance is futile.

Another Platform Collapses

• Social MediaThis Week In Writing, we talk about Reddit and what it means for centralized communities moving forward.

ChatGPT, the Writer’s Strike, and the Future of Content Writing

• AIThis Week In Writing, we explore a middle-of-the-road approach to ChatGPT and the future of writing

BlueSky, Mastodon, and Notes; Oh, My!

• Social MediaThis Week In Writing, we talk about all the “Twitter Alternatives” and what makes the most sense for writers.

We Have to Talk About Platform Proliferation

• Social MediaThis Week In Writing, we ask why no platform is content on doing one thing well and instead want to do all things poorly.

We Have to Talk About Substack

• Featured • PublishingThis Week In Writing, we talk about Diffusion of Innovation Theory and dying platforms.

The Era of Centralized Platforms Is Over

• Featured • PublishingThis Week In Writing, we discuss whether you should still own a website if you publish on Medium or Substack.

Introducing My Writing Community!

• EditorialA new way to connect with writers, discuss your interests, and receive feedback on your creative endeavors.

Use Better Words to Be More Inclusive

• CraftThis Week In Writing, we talk about words to avoid in 2023, a special offer from a friend, and Medium joining Mastodon

I Created a New Language in 5th Grade

• LifeThis Week In Writing, we explore our digital legacies, discuss permanence, and close out the year with something new.

Would You Burn Your Entire Archive

• CraftThis Week In Writing, we contemplate throwing out our leftovers and slimming down our digital presence.

The Day Twitter Died

• Social MediaWe’ll be singing, “Bye-bye, Miss American Pie. Drove my Tesla to the office, but there was just one guy.”

This Just in: Will Twitter Verification Save Twitter

• Social MediaElon Musk wants everyone to pay $8/month for Twitter verification, but will that save the platform or alienate people?

The Fate of The Seven Kingdoms

• Social MediaThe future on social media is much like the Game of Thrones. Right now, the only thing missing is a dragon.

Write Now is My Tribe of Mentors

• CraftWhat I learned from Tim Ferriss’ Tribe of Mentors and my answers to his 11 great questions.

Spring Into the Best Twitter Client You’ve Never Heard Of

• Social MediaHow does the Spring Twitter client by Junyu Kuang stack up to Tweetbot and Twitterrific?

How I Edit and Manage The Writing Cooperative

• EditorialWhat writers and editors can learn from my experience editing The Writing Cooperative, one of Medium’s top publications

You Should Be on Twitter

• Social MediaThis Week In Writing, we explore how I’m shocked how many writers don’t take advantage of Twitter’s potential for writers.

How To Disconnect From The Internet Without Going Broke

• Social MediaWe can’t hand our social media accounts to a pricey team as celebrities do—but there are actionable steps we can take toward a healthier relationship with media.

Beware the Ides of March?

• CraftThis Week In Writing, we reclaim the Ides of March and turn it into a day to celebrate and lift writers worldwide.

Is Revue Too Good to be True?

• FreelancingRevue is a newsletter tool that is deeply integrated with Twitter, but is it the right email marketing tool for freelancers?

Let’s Talk About Follower Counts

• Social MediaDo you know how many of your followers are fake? Chances are, it’s a lot more than you think. As a result, following numbers are useless.

How To Make Social Media Great Again

• Social MediaIf you have 30 minutes, you have everything necessary to enjoy social media

Twitter To The Rescue

• Social Media📝 This Week’s Goal: Learn how to leverage Twitter to market your writing and build your audience.

It’s Time to Verify the Internet

• Social MediaTwitter’s new Birdwatch feature is a good step, but more needs to be done.

How To Be A Successful Freelance Writer

• FreelancingEvery choice brings you closer to success. Or does it?

So, You’re New to Medium…

• PublishingAre you new to Medium? Do you want to make the most of your new account and start writing and making money? This is the guide you need.

AI Is Not an All or Nothing Choice

• Featured • AIThis Just In: AI use isn't a moral binary. There's a practical middle path for writers.

Unchecked Writing

• AIThis Just In: I stopped using Grammarly; have you noticed? Plus, a deeper exploration into AI writing and my friend the em dash.

Want to Write a Novel in November?

• CraftThis Just In: NaNoWriMo may be dead, but writers have two new options to help hit those writing goals.

AI Exposes the Deeper Rifts in the Writing Industry

• AIThis Just In: Monetization turns passions into sweatshops and AI is making it worse.

AI Killed NaNoWriMo

• AIThis Just In: The writing month challenge may be dead, but there’s a new option to keep writers going.

A Few More Thoughts on Copyright

• AIThis Just In: The history of copyright might be fraught, but it exposes a bigger issue when creating online.

Copyright in the Age of AI

• AIThis Just In: What does copyright do and does it even matter anymore?

Is Reading Dying

• CraftThis Just In: AI summaries and the pivot to video are bad news for the written word.

Are Apple’s Writing Tools the Right Stuff

• AIThis Just In: Apple Intelligence offers the boring version of AI I’ve hoped for, but is it helpful for writers?

Hitting the Reset Button

• PublishingLLM scraping is a virus eating up the internet, but I’m done fighting. Instead, I choose open access and human connection.

Is Generative AI Destroying the Open Web

• AISubscription walls prevent AI scraping, but at what cost? I’m rethinking my whole publishing strategy.

Is Apple Intelligence the AI for the Rest of Us

• AIThis Just In: Apple’s forthcoming entry into AI promises a private, personalized AI, but will it increase AI slop?

Generative AI in Creativity

• AIThe reader survey results have some interesting things to say about generative AI and creativity. Here’s why that’s a problem.

What Is Your Freelance Writing Rate

• FreelancingWriting jobs are evaporating for many reasons, but freelance rates were really bad long before AI came around.

Can We Find a Balance With AI?

• AIThe dichotomy of AI continues to baffle me as I see the good and the bad. Where do we draw the line, and how do we learn to live with this technology?

Don’t Feed the AI Beast

• AIThis Just In: Justin’s writing requires a subscription to prevent AI abuse; consider your own precautions.

An Update on Spam Submissions

• EditorialThis Week In Writing, we talk about spam submissions to The Writing Cooperative and look at some of your thoughts on being called AI.

Would You Want to Know if I Thought Your Writing Sounded Like AI

• EditorialThis Week In Writing, we talk about submissions to The Writing Cooperative and how to avoid false accusations.

Saving Frequently Isn’t The Only Way To Backup Your Writing

• CraftThis Week In Writing, we take a hard lesson from the latest Twitter/X hijinks. Plus, we look at what “human writing” means.

MIT Says ChatGPT Improves Bad Writing, But At What Cost?

• AIThis Week In Writing, we explore how ChatGPT and Grammarly are making us all sound the same.

AI Is Now Everywhere

• AIThis Week In Writing, we talk about Google’s new AI plan, what it means for writers, and why resistance is futile.

The Problem With Creative Entitlement

• AIThis Week In Writing, we explore how AI tools amplify the sometimes problematic relationship between creator and consumer

ChatGPT, the Writer’s Strike, and the Future of Content Writing

• AIThis Week In Writing, we explore a middle-of-the-road approach to ChatGPT and the future of writing

How I Use Midjourney to Create Featured Images for Articles

• AIGenerating unique and interesting featured images, you only need a Discord account and a little patience. Here’s how I use the tool.

AI Is Coming for Content Creators

• AIThis Week In Writing, we look at how AI is changing the content landscape and why that might be a good thing.

What Biases Do You Bring to Your Projects

• CraftThis Week In Writing, we explore biases in our creative pursuits and how those biases can translate to AI-generated content.

Success Comes to Those Who Work for It (Usually)

• CraftThis Week In Writing, we talk about success and perseverance through the lens of Simu Liu’s memoir. Oh, and AI writing, too.

Give Thanks to Our AI Overlords

• AIThis Week In Writing, we celebrate Thanksgiving and dive into the ever-improving AI-generated content.

This Just In: the Robots Are Coming for You

• AIThis Week In Writing, we take a quick break from our regularly scheduled programming to encourage you to vote.

Are Robots Taking Your Job?

• AI📝 This Week’s Goal: Focus on your own writing instead of the robot uprising

Creative Burnout and Why I’m Pausing The Writing Cooperative After 12 Years

• Featured • EditorialAlysa Liu's story is relatable and the timing is impeccable.

What Bad Bunny Gets That NBC Doesn’t

• CultureThis Just In: NBC hosted the Olympics, the Super Bowl, and Bad Bunny’s halftime show on the same night, so why was their messaging so poor?

AI Is Not an All or Nothing Choice

• Featured • AIThis Just In: AI use isn't a moral binary. There's a practical middle path for writers.

It’s the End of the Year as We Know It (and I Feel Tired)

• LifeThis Just In: It’s time to look back at the year that was and set up some hopes and dreams for the year to come, or something like that.

Unchecked Writing

• AIThis Just In: I stopped using Grammarly; have you noticed? Plus, a deeper exploration into AI writing and my friend the em dash.

The Dream of EPCOT

• LifeThis Just In: Walt Disney’s community of tomorrow is a celebration of humanity and a prototype for how we should live. Maybe we should listen.

It’s Not All About the Benjamins

• PublishingThis Just In: Yet one more thing that Diddy was wrong about.

The Internet Was Doomed From the Start

• Featured • PublishingThis Just In: Maybe it’s time to rethink the entire internet.

Want to Write a Novel in November?

• CraftThis Just In: NaNoWriMo may be dead, but writers have two new options to help hit those writing goals.

Answers to a Few Questions

• CraftThis Just In: There were fewer questions than I anticipated, but I will answer them nonetheless.

What Questions Do You Have

• CraftThis Just In: I won’t be participating in Medium Day this year, but I still want to keep the spirit alive. Ask me anything.

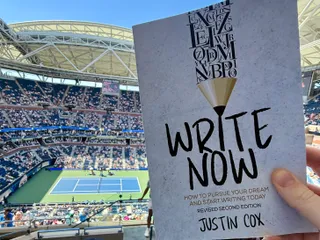

What I Did Different With This Book

• PublishingThis Just In: Launching a second edition wasn’t as simple as I thought it’d be, and I learned some lessons along the way.

Introducing Write Now’s Revised Second Edition!

• Featured • PublishingThis Just In: You can now access everything I’ve learned writing online over the last two-plus decades. Are you ready for it?

Can We Talk About Comments?

• PublishingThis Just In: Hearing from readers is a lot of fun until you start to get spammed with bots and AI nonsense farming for attention.

Let’s Talk About Tools

• TechThis Just In: There’s no single tool that can do everything and it’s extremely frustrating.

Battle of the Book Builders

• TechThis Just In: I tried to format my book using Vellum and Atticus. Instead, I learned something about app design and limitations.

Does My Journal Need a Backup

• TechThis Just In: I took a lot of your suggestions to heart and gave Obsidian a try. What I found was a bigger question.

Journals Aren’t Forever

• TechThis Just In: After over 13 years, I’ve deleted the Day One journal app. Here’s what it helped me realize about software subscriptions.

This One Has No Direction

• BurnoutThis Just In: Tried, drained, and a little burnt out isn’t exactly the best time to focus on your writing, but it’s why you do it anyway.

AI Exposes the Deeper Rifts in the Writing Industry

• AIThis Just In: Monetization turns passions into sweatshops and AI is making it worse.

The Cost of Rebellion

• Featured • Social MediaThis Just In: Rebellions are built on hope, but they require individual sacrifices for collective improvement.

Abuse of Power Comes for Nonprofits

• LifeThis Just In: Wikipedia’s 501(c)(3) tax exemption is threatened, but not by the IRS.

How to Move to Ghost In 2025

• PublishingThis Just In: Own your own publication by launching a website running Ghost. It’s not as difficult as it sounds.

AI Killed NaNoWriMo

• AIThis Just In: The writing month challenge may be dead, but there’s a new option to keep writers going.

The Age of Reaction

• Social MediaThis Just In: We’ve fallen into a dramascroll trap that will be very difficult to climb out of, but it isn’t impossible.

A Few More Thoughts on Copyright

• AIThis Just In: The history of copyright might be fraught, but it exposes a bigger issue when creating online.

Copyright in the Age of AI

• AIThis Just In: What does copyright do and does it even matter anymore?

Tapestry Is Weaving the Future Web

• TechThis Just In: The Iconfactory’s smash new app is a return to the web’s roots and where we all need to head.

The Cost of Simplification

• PublishingThis Just In: Owning your own platform can be complicated and sometimes simplifying can be costly.

A Bit About Me

• Featured • This Just InThis Just In: I answer interview questions that cover my views on writing and more.

The Perils of Personal Platforms

• PublishingWhat does it actually mean to leave the world of commercial platforms behind?

Update Those Mute Filters

• Social MediaThis Just In: Let’s collectively scream into the infinite abyss, find ourselves, and make the world better.

It’s Time to Rebel from Mass Market Social Media

• Featured • Social MediaThis Just In: IT is the villain in Silo. We should learn from those in the Down Deep and rise up.

The Forthcoming First Amendment Fight

• CraftThis Just In: So-called defenders of free speech are taking office, and we’re all in trouble. Plus, more predictions for 2025.

What Happens When Everything is Paywalled

• PublishingThis Just In: Wealth is becoming a determining factor in the type of World Wide Web you can access. And I’m not talking about speed.

Platforms Are Getting Much Worse

• PublishingThis Just In: Platforms want us to know exactly who controls the internet. It’s not us, but it can be!

Is Reading Dying

• CraftThis Just In: AI summaries and the pivot to video are bad news for the written word.

Empire Strikes Back Isn’t the End of the Series

• Featured • LifeThis Just In: Last week sucked, but there is always hope.

Are Apple’s Writing Tools the Right Stuff

• AIThis Just In: Apple Intelligence offers the boring version of AI I’ve hoped for, but is it helpful for writers?

This One’s for the Fans

• CultureThis Just In: Jimmy Buffet gets the due he deserves and shows what creative passion is all about.

When Creating Stops Being Fun

• CraftThis Just In: knowing when (and how) to hit delete is important for every creator’s sanity.

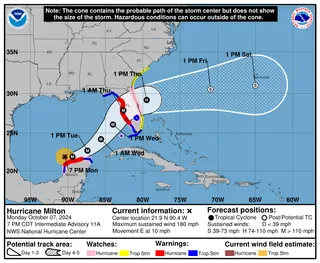

We Shouldn’t Have Taken Milton’s Stapler...

• LifeThis Just In: Hurricane Milton is becoming a real problem, and I’m exhausted.

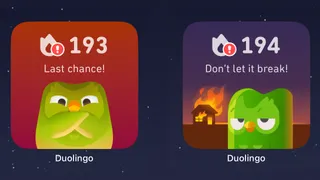

When Gamification Goes Awry

• TechWriting days, health rings, Duolingo… there are more streaks than time.

New Phone Who Dis

• TechNew technology fuels a desire to create but can also be overwhelming and lead to unmet expectations.

Hitting the Reset Button

• PublishingLLM scraping is a virus eating up the internet, but I’m done fighting. Instead, I choose open access and human connection.

Advice for Medium Writers Choose Publications Wisely

• PublishingJust because you CAN submit to a specific publication doesn’t mean you SHOULD.

Medium Day 2024: Questions I Didn't Have Time to Answer

• PublishingA collection of all the questions I didn’t have time for during my 30-minute Medium Day presentation.

Is Generative AI Destroying the Open Web

• AISubscription walls prevent AI scraping, but at what cost? I’m rethinking my whole publishing strategy.

Our Words Are Our Legacy

• CraftCreativity is a clash between individualism and our connection to history.

Fandom Is Being Ruined by "Fans"

• Featured • CultureHow review-bombing and constant, unfounded criticism takes agency away from creators

The Downside of Personal Platforms

• PublishingCreators need to think carefully about their personal sites and build in a way that prevents link rot.

Is Apple Intelligence the AI for the Rest of Us

• AIThis Just In: Apple’s forthcoming entry into AI promises a private, personalized AI, but will it increase AI slop?

Maybe I’m Bad at Social Media

• Social MediaSocial media “growth” requires giving in to quantity over quality. I don’t play that game.

Share, But Don’t Spoil

• PublishingA more personal internet relies on user recommendations but doesn’t spoil their experience.

Let’s Talk About Streaking

• BurnoutThis Just In: I’ve racked up a 56-day streak, but not in writing. Plus, I talk about Eurovision.

Chase Your Dreams and See What Happens

• LifeThis Just In: Mental health is a massive part of confidence and success. Dreams are inspiration. Use them.

Generative AI in Creativity

• AIThe reader survey results have some interesting things to say about generative AI and creativity. Here’s why that’s a problem.

What Is Your Freelance Writing Rate

• FreelancingWriting jobs are evaporating for many reasons, but freelance rates were really bad long before AI came around.

Why Criticize When You Can Celebrate?

• Featured • CraftThe attention economy destroyed our ability to dream for the sake of page views. It’s time we refocus our attention.

Can We Find a Balance With AI?

• AIThe dichotomy of AI continues to baffle me as I see the good and the bad. Where do we draw the line, and how do we learn to live with this technology?

Where Have All the Cowboys Gone

• Social MediaThis Just In: social media is bleeding users, but where are they going?

Write Like Taylor Swift

• CultureEmbrace life’s many eras and stop trying to be a one-dimensional writer.

Metrics Don’t Matter

• CraftHave we become so accustomed to seeing metrics everywhere that they no longer mean anything?

Celebrating a Decade on Medium

• Featured • PublishingLooking back at the past ten years of writing on Medium and what comes next.

Creation and Destruction Are Connected

• CraftThis Just In: The act of creating something is more important than the act of publishing what is made.

Don’t Take My Word for It

• CraftThis Just In: Personalized recommendations are the new algorithms and the best way to build a true audience.

Don’t Feed the AI Beast

• AIThis Just In: Justin’s writing requires a subscription to prevent AI abuse; consider your own precautions.

Sending Emails Is Hard

• PublishingThis Just In: Google and Yahoo crack down on bad behavior; set your DKIM, DMARC, and SPF records now.

Why Is Branding So Difficult?

• PublishingThis Just In: This Week In Writing rebrands; still explores the world with creativity and curiosity.

Why Make Anything if You Don’t Think It Will Be Great?

• CraftThis Week In Writing, we discuss greatness and how chasing it is a possible and noble goal.

Pay People Not Platforms

• PublishingThis Week In Writing, we look at why Substack’s collapse is actually a good thing for paid newsletters.

Let's Make the Internet Personal Again

• Featured • PublishingThis Week In Writing, we look at the once-in-a-generation opportunity to create a new internet filled with fun and originality.

Raising the Bar at the Writing Cooperative

Creative Burnout and Why I’m Pausing The Writing Cooperative After 12 Years

• Featured • EditorialAlysa Liu's story is relatable and the timing is impeccable.

AI Is Not an All or Nothing Choice

• Featured • AIThis Just In: AI use isn't a moral binary. There's a practical middle path for writers.

The Internet Was Doomed From the Start

• Featured • PublishingThis Just In: Maybe it’s time to rethink the entire internet.

Introducing Write Now’s Revised Second Edition!

• Featured • PublishingThis Just In: You can now access everything I’ve learned writing online over the last two-plus decades. Are you ready for it?

The Cost of Rebellion

• Featured • Social MediaThis Just In: Rebellions are built on hope, but they require individual sacrifices for collective improvement.

A Bit About Me

• Featured • This Just InThis Just In: I answer interview questions that cover my views on writing and more.

It’s Time to Rebel from Mass Market Social Media

• Featured • Social MediaThis Just In: IT is the villain in Silo. We should learn from those in the Down Deep and rise up.

Empire Strikes Back Isn’t the End of the Series

• Featured • LifeThis Just In: Last week sucked, but there is always hope.

Fandom Is Being Ruined by "Fans"

• Featured • CultureHow review-bombing and constant, unfounded criticism takes agency away from creators

Why Criticize When You Can Celebrate?

• Featured • CraftThe attention economy destroyed our ability to dream for the sake of page views. It’s time we refocus our attention.

Celebrating a Decade on Medium

• Featured • PublishingLooking back at the past ten years of writing on Medium and what comes next.

Let's Make the Internet Personal Again

• Featured • PublishingThis Week In Writing, we look at the once-in-a-generation opportunity to create a new internet filled with fun and originality.

Write Now With Karen Dionne

• Featured • InterviewToday's Write Now interview features Karen Dionne, bestselling author of THE MARSH KING’S DAUGHTER and THE WICKED SISTER.

Write Now With R.L. Stine

• Featured • InterviewToday's Write Now interview features R.L. Stine, legendary author of GOOSEBUMPS and SOMETHING STRANGE ABOUT MY BRAIN.

Write Now With Rebecca Yarros

• Featured • InterviewToday's Write Now interview features Rebecca Yarros, New York Times bestselling author of FOURTH WING and IRON FLAME.

Write Now With Taylor Lorenz

• Featured • InterviewToday's Write Now interview features Taylor Lorenz, technology reporter and author of EXTREMELY ONLINE: THE UNTOLD STORY OF FAME, INFLUENCE, AND POWER ON THE INTERNET.

Rising From the Rubble of Institutions

• Featured • LifeWhat happens when everything we know falls apart? We redefine ourselves and seek a new path through life.

We Have to Talk About Substack

• Featured • PublishingThis Week In Writing, we talk about Diffusion of Innovation Theory and dying platforms.

The Era of Centralized Platforms Is Over

• Featured • PublishingThis Week In Writing, we discuss whether you should still own a website if you publish on Medium or Substack.

Are You Begging for Eyes in the Attention Economy

• Featured • PublishingThis Week In Writing, we explore the internet’s move away from the attention economy and how writers can make the web more personal

Write Now with Dan Moren

• Featured • InterviewToday’s Write Now interview features Dan Moren, prolific writer, podcaster, and author of THE NOVA INCIDENT.

Write Now with Neal Shusterman

• Featured • InterviewToday’s Write Now interview features Neal Shusterman, author of DRY, CHALLENGER DEEP, UNWIND, SYTHE, and the upcoming GLEANINGS.

I Wrote a Book!

• Featured • PublishingThis Week In Writing, I announce my new book and provide an update on the Flash Fiction Writing Challenge!

Write Now with Xiran Jay Zhao

• Featured • InterviewToday’s Write Now interview features Xiran Jay Zhao, New York Times bestselling author of IRON WIDOW.

Write Now with Lauren Gibaldi

• Featured • InterviewLauren Gibaldi is an author, anthologist, and librarian. Lauren shares how she wrote three books by aiming for 30-minutes of writing a day.

Are You Writing For An Audience Or Authenticity?

• Featured • CraftWhat Emily Dickinson’s fictitious life teaches about fame

I Bought a Selfie Ring Light

• Featured • LifeOne step in my journey to becoming an influencer/thought leader/whatever you want to call me

Write Now with Julia Cameron

• Featured • InterviewThe author behind Morning Pages shares her writing process

Write Now with Eric Smith

• Featured • InterviewHow an author and literary agent champions inclusive stories

Choosing Growth Over Fear In A Time Of Uncertainty

• Featured • CraftNASA's process of landing on the moon can teach us to start choosing growth when things are hard. Tackle little things one at a time.

How To Be A Professional Freelance Writer: Invest In Yourself

• Featured • FreelancingToday I have some advice for anyone looking to launch a career as a freelance writer: invest in yourself. You are worth it!

Write Now with Charles Soule

• Featured • InterviewHow a former lawyer became the author of Marvel’s Star Wars comic series

Write Now with Sarah Knight

• Featured • InterviewHow The Life-Changing Magic of Not Giving a Fuck went from an idea to a bestselling series

Write Now with Karen M. McManus

• Featured • InterviewHow a former marketer writes bestselling character-driven mysteries

Write Now with Pierce Brown

• Featured • InterviewToday's Write Now interview features Pierce Brown, the #1 New York Times Bestselling author of the Red Rising saga.

Write Now with Kristen Arnett

• Featured • InterviewToday's Write Now interview features Kristen Arnett, the New York Times bestselling author of Mostly Dead Things.

What We Can Learn About Writing from Bruce Springsteen

• Featured • CultureIt took Bruce Springsteen six months to write Born to Run. There are a lot of things writers can learn from his process and success.

Write Now with Andy Weir

• Featured • InterviewToday's Write Now interview features Andy Weir, the New York Times bestselling author of The Martian and Artemis.

Building The Writing Cooperative: How Internet Strangers Developed a Writing Community

• Featured • EditorialDespite its massive size, The Writing Cooperative started with just two people who never met.

A Love Letter to Entrepreneurship

• Featured • LifeSometimes you just need to tell some(thing) how you feel. This love letter to entrepreneurship conveys what it means to be a "founder."

Girls, Robots, and Rock: Tokyo’s Amazing Robot Restaurant

• Featured • TravelTokyo has a building with LED lights and chrome so bright it’s like staring into the sun. This is Shinjuku’s Robot Restaurant.

How To Write Blog Posts That People Actually Read

• Featured • CraftLet’s give the people what they really want.

The Kia Soul Conspiracy

• Featured • LifeDo you see a Kias at every turn? Do they follow you home and to work? Are they watching you right now? Welcome to the Kia Soul Conspiracy.

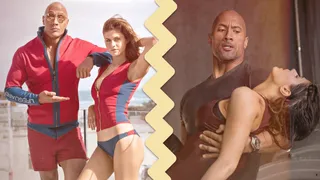

The Ultimate Dwayne Johnson and Alexandra Daddario Memorial Day Movie Showdown

• Featured • CultureComparing the disaster porn San Andreas to the beach porn Baywatch determines the ultimate Dwayne Johnson / Alexandra Daddario movie.

How I Became A Villain on Christian Radio

• Featured • Social MediaAnd a Social Media Expert at the Same Time

Overcoming Social Anxiety at a Video Game Convention

• Featured • CultureDiscover what it was like attending PAX in 2008, including meeting Felicia Day and experiencing social anxiety at the convention center, in this entertaining article.

The Grand Unified Theory of Scarlett Johansson Movies

• Featured • CultureWhat if all Scarlett Johansson movies are connected?

An Instagram Scavenger Hunt for Free Art Friday in Atlanta

• Featured • Social MediaHow Instagram and the #fafatl hashtag created the Free Art Friday scavenger hunt throughout Atlanta for free, original works of art.
