ChatGPT, the Writers’ Strike, and the Future of Content Writing

This Week In Writing, we explore a middle-of-the-road approach to ChatGPT and the future of writing.

The Writers Guild of America is on strike. I'm not fully versed in the provisions the Guild is striking over, but I know one of them is protection against the absolute tidal wave of AI. AI can't write with feeling or emotion (yet), but the WGA is wise to address the inevitable point where it will.

AI developments are coming fast and furious and are honestly hard to keep up with. This is hardly a scientific poll, but I'm using ChatGPT more often in everyday situations and know that my colleagues are, too. Today, I want to address the evolving state of AI writing tools, how to potentially use them responsibly, and what they mean for the future of writers.

First, this is one of those situations where views change as the technology evolves. I've always looked at generative AI as a functional tool writers can use in their arsenal, but not something that should be used solely to create "content" (boy, do I dislike that word). I'm sticking with this stance, but the lines are starting to blur.

Currently, The Writing Cooperative rules state you must disclose the use of generative AI. Not one submission in the last four weeks has done so. Does that mean no one used ChatGPT to build their submissions? Maybe, though I find it highly unlikely. Someone on one of the channels recently questioned the policy, asking what happens when all writing tools and apps have generative AI built in. It's a really good question.

Let's look at Grammarly for a minute. Technically, Grammarly has always been an AI company. Their fancy algorithm determines the most likely order of words, and it considers that arrangement grammatically correct. This description is an oversimplification, but it works. Now, Grammarly is going deeper into generative AI with their Go product. Is it different from what they've been offering simply because it creates longer passages? I don't know. I don't, however, think writers need to disclose when they use Grammarly. So what does that say?

Lately, I've used ChatGPT for multiple projects in what I think are responsible ways. Here are a few ways I've used the tool recently:

- Revising existing passages by using the prompt "revise this:" and entering the paragraph;

- Asking for subheadings when my mind draws a blank by using the prompt "what is a one-word subheading for the following paragraph:" and entering the text;

- Taking my bullet point notes from client calls and asking ChatGPT to put them into complete sentences using the prompt "take the following notes and turn them into coherent sentences:" and then entering my bullet points.
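If you'd rather not retype those prompts every time, they could be kept in a tiny helper. This is only a minimal Python sketch: the prompt wording comes from the list above, but `PROMPT_PREFIXES` and `build_prompt` are hypothetical names made up for illustration, and the result is simply text you would paste into ChatGPT.

```python
# Hypothetical helper: the prefixes are the prompts quoted in the list above;
# the dictionary keys and function name are illustrative assumptions.
PROMPT_PREFIXES = {
    "revise": "revise this:",
    "subheading": "what is a one-word subheading for the following paragraph:",
    "notes": "take the following notes and turn them into coherent sentences:",
}


def build_prompt(task: str, text: str) -> str:
    """Return the prefix for `task` followed by the writer's own text."""
    if task not in PROMPT_PREFIXES:
        raise ValueError(f"unknown task: {task!r}")
    # A blank line separates the instruction from the text it applies to.
    return f"{PROMPT_PREFIXES[task]}\n\n{text.strip()}"


if __name__ == "__main__":
    print(build_prompt("revise", "My draft paragraph goes here."))
```

The point of the design is that the writing stays yours: the helper only attaches a short instruction to text you already wrote.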

Additionally, I've been working with the ChatGPT API to essentially build a fancy MadLib for my nonprofit clients. They'll eventually input a few pieces of information, which I'll use behind the scenes to combine into a text prompt run through the API. Ultimately, this will help clients better express their ideas and provide me with better information when working with them.
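To give a sense of what a fill-in-the-blank prompt might look like, here is a minimal Python sketch. The template wording, the field names, and the `fill_prompt` function are all assumptions made up for illustration, not the actual tool, and the step that sends the finished prompt through the API is deliberately left out.

```python
from string import Template

# Hypothetical template and field names, invented for this example.
PROMPT_TEMPLATE = Template(
    "Write a short paragraph for $organization explaining how $program "
    "helps $audience. Use a $tone tone."
)
REQUIRED_FIELDS = ("organization", "program", "audience", "tone")


def fill_prompt(answers: dict[str, str]) -> str:
    """Combine a client's answers into one text prompt."""
    # Refuse to build a prompt with blanks left unfilled.
    missing = [f for f in REQUIRED_FIELDS if not answers.get(f, "").strip()]
    if missing:
        raise ValueError("missing answers: " + ", ".join(missing))
    cleaned = {f: answers[f].strip() for f in REQUIRED_FIELDS}
    return PROMPT_TEMPLATE.substitute(cleaned)


if __name__ == "__main__":
    print(fill_prompt({
        "organization": "Helping Hands",
        "program": "the food pantry",
        "audience": "local families",
        "tone": "warm",
    }))
```

Checking for missing answers before building the prompt keeps a half-filled form from producing a vague request.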

I'd like to think these are all responsible ways to use ChatGPT in my regular writing process. However, I'm torn by the dichotomy here. On one hand, as a writer myself, I want to advocate for others and their livelihoods. Writers should be paid for their work, and the WGA is right to ask for AI protections. On the other hand, I see how ChatGPT saves me time and enhances my existing workflow. Like Natalie Imbruglia, I'm torn.

I still don't think generative AI should be used solely to create entertainment. I don't want to read a personal essay penned by an AI, nor do I think the next blockbuster film should be written by an AI that knows what will likely make the most money. Will I notice these things when they happen? Maybe at first, but over time, probably not.

What do you think? Are you torn like I am, or are your views of ChatGPT and generative AI rock solid?

PS: Besides Grammarly and asking how to spell Imbruglia, everything in this article came out of my head.

Let's check in on Bluesky...

After talking about Bluesky and other "Twitter alternatives" last week, one of you kind people gave me an invite to the platform. My initial impression? It's chaotic.

I think Bluesky is intentionally inviting journalists, Twitter clout chasers, and meme lords in the first wave to try and garner some of this initial hype. It's why you keep seeing articles that say Bluesky is the next great thing despite only having roughly 60k users.

To me, Bluesky is the latest version of Clubhouse, the overhyped social platform that quickly rose to prominence and just as quickly died. It's invite-only and going after the "cool kids" from Twitter. Sure, it creates an initial buzz, but it didn't work out for Clubhouse. Maybe it will for Bluesky? I don't know.

Scrolling through Bluesky feels like the tech equivalent of the White House Correspondents’ Dinner mixed with dick jokes. It's a bunch of political and journalist nerds mixed with shitposters. That’s not inherently a bad thing, but is that what we want from social media? Or is that exactly what we want from social media?

Related Posts

Don’t Take My Word for It

• CraftThis Just In: Personalized recommendations are the new algorithms and the best way to build a true audience.

Why Make Anything if You Don’t Think It Will Be Great?

• CraftThis Week In Writing, we discuss greatness and how chasing it is a possible and noble goal.

Pay People Not Platforms

• PublishingThis Week In Writing, we look at why Substack’s collapse is actually a good thing for paid newsletters.

Let's Make the Internet Personal Again

• Featured • PublishingThis Week In Writing, we look at the once-in-a-generation opportunity to create a new internet filled with fun and originality.

Raising the Bar at the Writing Cooperative

• EditorialThis Week In Writing, we look at changes to our publication standards and what they mean for you.

Advent, Waiting, and the Year of Transitions

• LifeThis Week In Writing, we look back at the year that was and determine what it means for the year to come.

Refilling the Creativity Tank

• LifeThis Week In Writing, we discuss what happens when creativity finds other outlets.

Celebrate Giving Tuesday

• LifeThis Week In Writing, we take a quick break from our regularly scheduled programming to celebrate nonprofit organizations.

It’s Time We Discuss Medium

• PublishingThis Week In Writing, we address the platform that has supported my writing for nearly a decade.

My First Year on Mastodon and the Future of Social Media

• Social MediaThis Week In Writing, we look back at how social media fractured and why it’s a good thing for us all.

The Economics of a Self-Hosted Newsletter

• PublishingThis Week In Writing, we talk about what happens when you eliminate platforms and go after it on your own.

Trick or Treat?

• CraftThis Week In Writing, we talk about pen names and whether they make sense for writers.

A New Era Begins

• PublishingThis Week In Writing, we explore the internet’s current metamorphosis and how you can be part of the revolution.

My History of Blogging

• PublishingThis Week In Writing, we celebrate the blog, explore the pendulum of online writing, and double down on quality.

An Update on Spam Submissions

• EditorialThis Week In Writing, we talk about spam submissions to The Writing Cooperative and look at some of your thoughts on being called AI.

Would You Want to Know if I Thought Your Writing Sounded Like AI

• EditorialThis Week In Writing, we talk about submissions to The Writing Cooperative and how to avoid false accusations.

How I Feel About Engagement Numbers

• PublishingThis Week In Writing, we discuss what engagement means and if I get discouraged by a perceived lack thereof. Plus, a look at the future (again).

My Writing Is About Building Community

• PublishingThis Week In Writing, we highlight some of the people I’ve met writing online and answer some of your questions.

It’s Time for a Fresh Start

• PublishingThis Week In Writing, we talk about new Apple products, home renovations, and changes to the newsletter.

Choose Your Own Design

• PublishingThis Week In Writing, we explore the wonderful world of blogs, where writers truly get creative.

Expanding Universes Make Better Stories

• CultureThis Week In Writing, we look at how worldbuilding is an essential part of epic storytelling.

Your Questions Answered

• EditorialThis Week In Writing, we recap a successful Medium Day and address some of the questions I didn’t have time to answer.

Saving Frequently Isn’t The Only Way To Backup Your Writing

• CraftThis Week In Writing, we take a hard lesson from the latest Twitter/X hijinks. Plus, we look at what “human writing” means.

MIT Says ChatGPT Improves Bad Writing, But At What Cost?

• AIThis Week In Writing, we explore how ChatGPT and Grammarly are making us all sound the same.

Do CTAs Even Work Anymore?

• PublishingThis Week In Writing, we explore the “necessary evil” of calls to action and ask if they are any better than tacky banner ads.

AI Is Not an All or Nothing Choice

• Featured • AIThis Just In: AI use isn't a moral binary. There's a practical middle path for writers.

It’s Time to Rebel from Mass Market Social Media

• Featured • Social MediaThis Just In: IT is the villain in Silo. We should learn from those in the Down Deep and rise up.

Platforms Are Getting Much Worse

• PublishingThis Just In: Platforms want us to know exactly who controls the internet. It’s not us, but it can be!

Where Have All the Cowboys Gone

• Social MediaThis Just In: social media is bleeding users, but where are they going?

Let's Make the Internet Personal Again

• Featured • PublishingThis Week In Writing, we look at the once-in-a-generation opportunity to create a new internet filled with fun and originality.

My First Year on Mastodon and the Future of Social Media

• Social MediaThis Week In Writing, we look back at how social media fractured and why it’s a good thing for us all.

Saving Frequently Isn’t The Only Way To Backup Your Writing

• CraftThis Week In Writing, we take a hard lesson from the latest Twitter/X hijinks. Plus, we look at what “human writing” means.

AI Is Now Everywhere

• AIThis Week In Writing, we talk about Google’s new AI plan, what it means for writers, and why resistance is futile.

Another Platform Collapses

• Social MediaThis Week In Writing, we talk about Reddit and what it means for centralized communities moving forward.

ChatGPT, the Writer’s Strike, and the Future of Content Writing

• AIThis Week In Writing, we explore a middle-of-the-road approach to ChatGPT and the future of writing

BlueSky, Mastodon, and Notes; Oh, My!

• Social MediaThis Week In Writing, we talk about all the “Twitter Alternatives” and what makes the most sense for writers.

We Have to Talk About Platform Proliferation

• Social MediaThis Week In Writing, we ask why no platform is content on doing one thing well and instead want to do all things poorly.

We Have to Talk About Substack

• Featured • PublishingThis Week In Writing, we talk about Diffusion of Innovation Theory and dying platforms.

The Era of Centralized Platforms Is Over

• Featured • PublishingThis Week In Writing, we discuss whether you should still own a website if you publish on Medium or Substack.

Introducing My Writing Community!

• EditorialA new way to connect with writers, discuss your interests, and receive feedback on your creative endeavors.

Use Better Words to Be More Inclusive

• CraftThis Week In Writing, we talk about words to avoid in 2023, a special offer from a friend, and Medium joining Mastodon

I Created a New Language in 5th Grade

• LifeThis Week In Writing, we explore our digital legacies, discuss permanence, and close out the year with something new.

Would You Burn Your Entire Archive

• CraftThis Week In Writing, we contemplate throwing out our leftovers and slimming down our digital presence.

The Day Twitter Died

• Social MediaWe’ll be singing, “Bye-bye, Miss American Pie. Drove my Tesla to the office, but there was just one guy.”

This Just in: Will Twitter Verification Save Twitter

• Social MediaElon Musk wants everyone to pay $8/month for Twitter verification, but will that save the platform or alienate people?

The Fate of The Seven Kingdoms

• Social MediaThe future on social media is much like the Game of Thrones. Right now, the only thing missing is a dragon.

Write Now is My Tribe of Mentors

• CraftWhat I learned from Tim Ferriss’ Tribe of Mentors and my answers to his 11 great questions.

Spring Into the Best Twitter Client You’ve Never Heard Of

• Social MediaHow does the Spring Twitter client by Junyu Kuang stack up to Tweetbot and Twitterrific?

How I Edit and Manage The Writing Cooperative

• EditorialWhat writers and editors can learn from my experience editing The Writing Cooperative, one of Medium’s top publications

You Should Be on Twitter

• Social MediaThis Week In Writing, we explore how I’m shocked how many writers don’t take advantage of Twitter’s potential for writers.

AI Is Not an All or Nothing Choice

• Featured • AIThis Just In: AI use isn't a moral binary. There's a practical middle path for writers.

Unchecked Writing

• AIThis Just In: I stopped using Grammarly; have you noticed? Plus, a deeper exploration into AI writing and my friend the em dash.

Want to Write a Novel in November?

• CraftThis Just In: NaNoWriMo may be dead, but writers have two new options to help hit those writing goals.

AI Exposes the Deeper Rifts in the Writing Industry

• AIThis Just In: Monetization turns passions into sweatshops and AI is making it worse.

AI Killed NaNoWriMo

• AIThis Just In: The writing month challenge may be dead, but there’s a new option to keep writers going.

A Few More Thoughts on Copyright

• AIThis Just In: The history of copyright might be fraught, but it exposes a bigger issue when creating online.

Copyright in the Age of AI

• AIThis Just In: What does copyright do and does it even matter anymore?

Is Reading Dying

• CraftThis Just In: AI summaries and the pivot to video are bad news for the written word.

Are Apple’s Writing Tools the Right Stuff

• AIThis Just In: Apple Intelligence offers the boring version of AI I’ve hoped for, but is it helpful for writers?

Hitting the Reset Button

• PublishingLLM scraping is a virus eating up the internet, but I’m done fighting. Instead, I choose open access and human connection.

Is Generative AI Destroying the Open Web

• AISubscription walls prevent AI scraping, but at what cost? I’m rethinking my whole publishing strategy.

Is Apple Intelligence the AI for the Rest of Us

• AIThis Just In: Apple’s forthcoming entry into AI promises a private, personalized AI, but will it increase AI slop?

Generative AI in Creativity

• AIThe reader survey results have some interesting things to say about generative AI and creativity. Here’s why that’s a problem.

What Is Your Freelance Writing Rate

• FreelancingWriting jobs are evaporating for many reasons, but freelance rates were really bad long before AI came around.

Can We Find a Balance With AI?

• AIThe dichotomy of AI continues to baffle me as I see the good and the bad. Where do we draw the line, and how do we learn to live with this technology?

Don’t Feed the AI Beast

• AIThis Just In: Justin’s writing requires a subscription to prevent AI abuse; consider your own precautions.

An Update on Spam Submissions

• EditorialThis Week In Writing, we talk about spam submissions to The Writing Cooperative and look at some of your thoughts on being called AI.

Would You Want to Know if I Thought Your Writing Sounded Like AI

• EditorialThis Week In Writing, we talk about submissions to The Writing Cooperative and how to avoid false accusations.

Saving Frequently Isn’t The Only Way To Backup Your Writing

• CraftThis Week In Writing, we take a hard lesson from the latest Twitter/X hijinks. Plus, we look at what “human writing” means.

MIT Says ChatGPT Improves Bad Writing, But At What Cost?

• AIThis Week In Writing, we explore how ChatGPT and Grammarly are making us all sound the same.

AI Is Now Everywhere

• AIThis Week In Writing, we talk about Google’s new AI plan, what it means for writers, and why resistance is futile.

The Problem With Creative Entitlement

• AIThis Week In Writing, we explore how AI tools amplify the sometimes problematic relationship between creator and consumer

ChatGPT, the Writer’s Strike, and the Future of Content Writing

• AIThis Week In Writing, we explore a middle-of-the-road approach to ChatGPT and the future of writing

How I Use Midjourney to Create Featured Images for Articles

• AIGenerating unique and interesting featured images, you only need a Discord account and a little patience. Here’s how I use the tool.

Creative Burnout and Why I’m Pausing The Writing Cooperative After 12 Years

• Featured • EditorialAlysa Liu's story is relatable and the timing is impeccable.

What Bad Bunny Gets That NBC Doesn’t

• CultureThis Just In: NBC hosted the Olympics, the Super Bowl, and Bad Bunny’s halftime show on the same night, so why was their messaging so poor?

AI Is Not an All or Nothing Choice

• Featured • AIThis Just In: AI use isn't a moral binary. There's a practical middle path for writers.

It’s the End of the Year as We Know It (and I Feel Tired)

• LifeThis Just In: It’s time to look back at the year that was and set up some hopes and dreams for the year to come, or something like that.

Unchecked Writing

• AIThis Just In: I stopped using Grammarly; have you noticed? Plus, a deeper exploration into AI writing and my friend the em dash.

The Dream of EPCOT

• LifeThis Just In: Walt Disney’s community of tomorrow is a celebration of humanity and a prototype for how we should live. Maybe we should listen.

It’s Not All About the Benjamins

• PublishingThis Just In: Yet one more thing that Diddy was wrong about.

The Internet Was Doomed From the Start

• Featured • PublishingThis Just In: Maybe it’s time to rethink the entire internet.

Want to Write a Novel in November?

• CraftThis Just In: NaNoWriMo may be dead, but writers have two new options to help hit those writing goals.

Answers to a Few Questions

• CraftThis Just In: There were fewer questions than I anticipated, but I will answer them nonetheless.

What Questions Do You Have

• CraftThis Just In: I won’t be participating in Medium Day this year, but I still want to keep the spirit alive. Ask me anything.

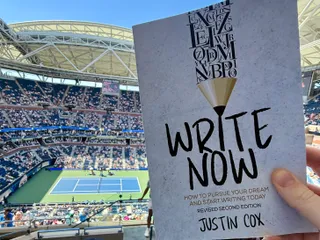

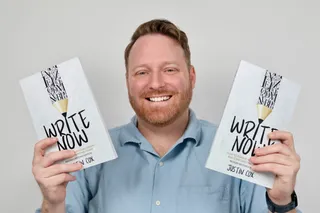

What I Did Different With This Book

• PublishingThis Just In: Launching a second edition wasn’t as simple as I thought it’d be, and I learned some lessons along the way.

Introducing Write Now’s Revised Second Edition!

• Featured • PublishingThis Just In: You can now access everything I’ve learned writing online over the last two-plus decades. Are you ready for it?

Can We Talk About Comments?

• PublishingThis Just In: Hearing from readers is a lot of fun until you start to get spammed with bots and AI nonsense farming for attention.

Let’s Talk About Tools

• TechThis Just In: There’s no single tool that can do everything and it’s extremely frustrating.

Battle of the Book Builders

• TechThis Just In: I tried to format my book using Vellum and Atticus. Instead, I learned something about app design and limitations.

Does My Journal Need a Backup

• TechThis Just In: I took a lot of your suggestions to heart and gave Obsidian a try. What I found was a bigger question.

Journals Aren’t Forever

• TechThis Just In: After over 13 years, I’ve deleted the Day One journal app. Here’s what it helped me realize about software subscriptions.

This One Has No Direction

• BurnoutThis Just In: Tried, drained, and a little burnt out isn’t exactly the best time to focus on your writing, but it’s why you do it anyway.

AI Exposes the Deeper Rifts in the Writing Industry

• AIThis Just In: Monetization turns passions into sweatshops and AI is making it worse.

The Cost of Rebellion

• Featured • Social MediaThis Just In: Rebellions are built on hope, but they require individual sacrifices for collective improvement.

Abuse of Power Comes for Nonprofits

• LifeThis Just In: Wikipedia’s 501(c)(3) tax exemption is threatened, but not by the IRS.

How to Move to Ghost In 2025

• PublishingThis Just In: Own your own publication by launching a website running Ghost. It’s not as difficult as it sounds.

Creative Burnout and Why I’m Pausing The Writing Cooperative After 12 Years

• Featured • EditorialAlysa Liu's story is relatable and the timing is impeccable.

What Bad Bunny Gets That NBC Doesn’t

• CultureThis Just In: NBC hosted the Olympics, the Super Bowl, and Bad Bunny’s halftime show on the same night, so why was their messaging so poor?

AI Is Not an All or Nothing Choice

• Featured • AIThis Just In: AI use isn't a moral binary. There's a practical middle path for writers.

It’s the End of the Year as We Know It (and I Feel Tired)

• LifeThis Just In: It’s time to look back at the year that was and set up some hopes and dreams for the year to come, or something like that.

Unchecked Writing

• AIThis Just In: I stopped using Grammarly; have you noticed? Plus, a deeper exploration into AI writing and my friend the em dash.

It’s Not All About the Benjamins

• PublishingThis Just In: Yet one more thing that Diddy was wrong about.

Want to Write a Novel in November?

• CraftThis Just In: NaNoWriMo may be dead, but writers have two new options to help hit those writing goals.

Answers to a Few Questions

• CraftThis Just In: There were fewer questions than I anticipated, but I will answer them nonetheless.

What Questions Do You Have

• CraftThis Just In: I won’t be participating in Medium Day this year, but I still want to keep the spirit alive. Ask me anything.

What I Did Different With This Book

• PublishingThis Just In: Launching a second edition wasn’t as simple as I thought it’d be, and I learned some lessons along the way.

Introducing Write Now’s Revised Second Edition!

• Featured • PublishingThis Just In: You can now access everything I’ve learned writing online over the last two-plus decades. Are you ready for it?

Let’s Talk About Tools

• TechThis Just In: There’s no single tool that can do everything and it’s extremely frustrating.

Battle of the Book Builders

• TechThis Just In: I tried to format my book using Vellum and Atticus. Instead, I learned something about app design and limitations.

Does My Journal Need a Backup

• TechThis Just In: I took a lot of your suggestions to heart and gave Obsidian a try. What I found was a bigger question.

Journals Aren’t Forever

• TechThis Just In: After over 13 years, I’ve deleted the Day One journal app. Here’s what it helped me realize about software subscriptions.

AI Exposes the Deeper Rifts in the Writing Industry

• AIThis Just In: Monetization turns passions into sweatshops and AI is making it worse.

AI Killed NaNoWriMo

• AIThis Just In: The writing month challenge may be dead, but there’s a new option to keep writers going.

A Few More Thoughts on Copyright

• AIThis Just In: The history of copyright might be fraught, but it exposes a bigger issue when creating online.

Copyright in the Age of AI

• AIThis Just In: What does copyright do and does it even matter anymore?

The Forthcoming First Amendment Fight

• CraftThis Just In: So-called defenders of free speech are taking office, and we’re all in trouble. Plus, more predictions for 2025.

Is Reading Dying

• CraftThis Just In: AI summaries and the pivot to video are bad news for the written word.

Are Apple’s Writing Tools the Right Stuff

• AIThis Just In: Apple Intelligence offers the boring version of AI I’ve hoped for, but is it helpful for writers?

This One’s for the Fans

• CultureThis Just In: Jimmy Buffet gets the due he deserves and shows what creative passion is all about.

When Creating Stops Being Fun

• CraftThis Just In: knowing when (and how) to hit delete is important for every creator’s sanity.

When Gamification Goes Awry

• TechWriting days, health rings, Duolingo… there are more streaks than time.